
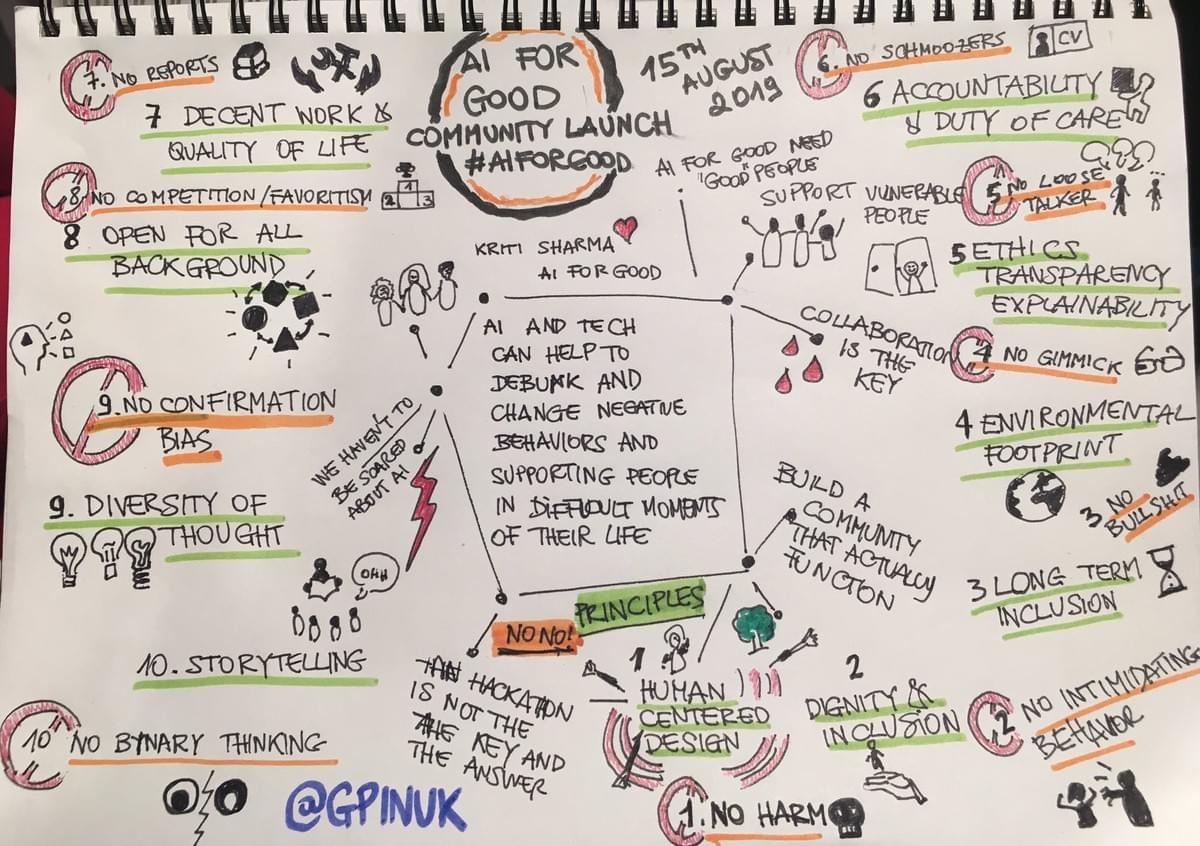

- Human-centered design — put humans at the heart of your product design process and make sure their needs, accessibility and technical feasibility are aligned; poor design leads to poor adoption and in our case also to negative unintended consequences.

- Dignity & Inclusion — remember that we create solutions not for an average user or beneficiary, but for our planet and fellow human beings; these people shouldn’t be treated as “dependents” who can be left to despair if the funding stops; they are entitled to the same public goods and services as you and me, so we must include them in the design process by listening carefully and empathising with them as if they were our relatives; they may be underserved, but they are not helpless: they have every right and intention to help themselves, so we should treat them with respect and dignity, share our knowledge on how to become self-sufficient, and of course lend a helping hand, but as equals; we all deal with tough situations throughout life, and they will do the same for you when you need help.

- Long term commitment to impact — look for an appropriate scalable business model and a sustainable impact strategy; a short-term intervention may produce good results for your shareholders, but its impact after you leave can take a turn and affect people in the wrong way; don’t get into this business using powerful and expensive technology just to make a name or a quick profit; either don’t overpromise, or make sure there is a durable plan to stay on the ground as long as necessary to make a lasting positive impact.

- Leave no trace or environmental sustainability — remember that AI is not only costly but also data- and energy-hungry; be cautious about the water and energy use and the carbon footprint your project may leave on the planet; for example, using a smart algorithm to divert traffic and lower air pollution in one area doesn’t solve the pollution problem; it just moves it elsewhere and makes other areas more polluted.

- Ethics, transparency, explainability — whatever we build, it’s our responsibility to make it as transparent as possible and to communicate how it works to all stakeholders; we also have to conduct data privacy and algorithmic fairness assessments and plan for any unintended consequences.

- Accountability & duty of care — make sure to fix any issues in your product in a timely manner; iterate based on feedback from end-users; keep your models up to date using the latest data, because behaviour changes over time and you need to factor that into your research and training; most importantly, be prepared to take the blame if your product fails to deliver what you promised.

- Decent work & quality of life — for your teammates and collaborators, ensure equal opportunities and fair income, security in the workplace and social protection; it’s also important to protect their mental health: for example, labelling data on domestic abuse or suicide attempts can have a very negative effect on the person who performs the labelling.

- Open for people from all backgrounds — not just ML engineers and data scientists, but anthropologists, humanitarians, designers, communicators, storytellers, doctors, teachers, etc.; the most effective project teams consist of people with complementary skills and behaviours who are committed to a common objective, so the only thing that matters is your commitment to action on AI for Good.

- Diversity of thought — without diversity there is no progress or innovation; we are for gender, race, sex, orientation, ethnic, socioeconomic and age diversity but not just for the sake of ticking the box; we genuinely want to learn from new voices, views and experiences that haven’t been heard before and to find new ways of tackling long-standing issues; we believe in unique mindsets and creative freedom and are here to actively listen and learn from them.

- Storytelling — we are educators who can also tell a story, using plain language to explain complex concepts: Why is the problem important? How might our technology help solve it? Are there alternatives? What’s the impact of our technology? How can it be scaled or adapted to another problem space?

Our prime purpose is to help others and if you can’t help them, at least don’t hurt them. — Dalai Lama

Big no-no’s in our community:

- No harm — don’t build anything to harm people, even if they are nasty politicians, and by all means anticipate and mitigate negative unintended consequences.

- No intimidating behaviour — we have a strict “no as…holes” policy or, to put it simply, just be nice or leave; even if you are a nice person, you might sometimes feel a bit smarter than or in some way superior to others, but please keep it to yourself; no one is here to listen to condescending preaching, a patronising tone of voice or mansplaining; we are here to get inspired, learn from each other and try to solve problems.

- No BS — no fake news, fake skills or fake AI for Good projects; we are not a vehicle to make them look real; we are looking for practical approaches and people who can deliver them, and we want to showcase what actually works.

- No gimmick — we don’t need to apply AI or another emerging tech just for the sake of tech; we don’t need any more pseudo-solutions; trendy but pointless technologies just divert attention and funding away from the problem area; solutions by definition should address the root problem.

- No loose talkers — we are a community of doers who prefer action over another long debate about the problem; we are here not to waste a lifetime contemplating ideas and the barriers to implementing them, but to solve some of the toughest challenges facing humanity, so that the next generation can keep enjoying life on our planet.

- No schmoozers — we will not tolerate people who come here only to poach talent from other companies or to offer their consulting services, unless they are doing it for a good cause.

- No reports — we are not a think tank and are not going to produce any scientific papers or research reports as a group; members may perhaps do so on an individual level, and then we can simply highlight what has already been written.

- No competition or favouritism — we have to respect and treat each other as equals; no person or project in our community is better than the others, and each deserves attention, especially given that they all come from different social areas or AI applications.

- No confirmation bias — even though it’s very hard given that we never have enough time, we would like to avoid affirming our prior beliefs and jumping to quick conclusions; let’s try to understand the whole picture of the problem, look at it from all the different angles and consider them in our design process.

- No binary thinking — let’s avoid oversimplifying and splitting the world into two polar opposites: right or wrong, innovation or ethics, etc.; it’s always nice to have a constructive debate, but binary thinking leads to polarisation and hence to problem-solving paralysis, so let’s use critical thinking instead and challenge our own opinions.

This is the first draft and by all means please let us know if you have any questions, comments or objections. Your feedback is very important to make sure these principles are interpreted accurately and made actionable. We hope this approach will help you prepare to start on the #AIforGood journey!